As cloud-based Enterprise Content Management (ECM) solutions become more popular, companies are aiming to replace their on-premise ECM systems with cloud-based ones. This gives notable benefits such as lower costs, higher scalability, and increased productivity. It may seem daunting for companies to migrate all their content to the cloud, but by following ETL, the process can be done as efficiently as possible.

ETL

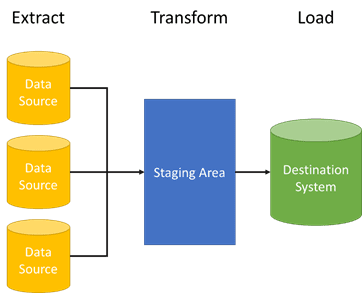

ETL, which stands for Extract, Transform, and Load, is a common process used in data integration. It describes the process of extracting data from one or more sources, transforming the data to fit the requirements of the destination system, and then finally loading it to the destination. Even though ETL is most associated with data integration, its steps can still be followed to migrate content from one ECM to another.

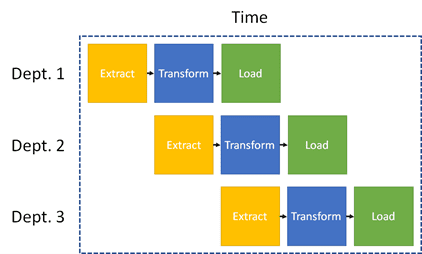

Additionally, each phase of ETL can be performed in a pipeline. Pipelining is a technique where a sequence of instructions are performed in parallel with other sequences, saving time and increasing efficiency.

For example, let’s have three departments that want to migrate their content. Once the first department finishes extraction, it will move on to transforming. Once the first department starts transforming, the second one will start extraction. Afterwards, the second department will start transforming while the third department starts extraction. All three departments will have different phases executing concurrently.

Extract

The first stage is extracting the content from the source ECM. Afterwards, the extracted data is consolidated into a staging area.

The staging area is the storage area that lies between the source and destination ECMs. It serves to hold the data to process in the Transform stage. This is to make sure none of the source data is affected during the ETL process. Additionally, this lets us validate the extracted data. For example, we can find which data failed to extract.

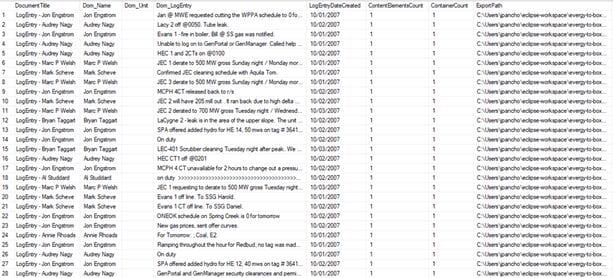

To extract content from the source ECM, its API will be used to extract the files and metadata. The files will be stored inside a drive in the staging area. For metadata, they will be stored in CSV files or in a database. For each row in the CSV file or database table, it will associate an extracted file along with its metadata values.

Transform

The second stage is transforming the data held in the staging area to fit the requirements of the destination ECM for loading. At this stage, a series of operations may be executed on the files and metadata. Some notable ones include:

- Cleaning unsupported values: A file in the source ECM may have special characters in their name that cannot be used in the destination ECM. This is remedied by removing or replacing the special characters. The same thing can be done for metadata values with unsupported characters as well.

- Filtering content: Some content may not be selected when migrating. For example, files marked for deletion should not be migrated if they will end up getting deleted anyway.

- Using a new folder structure: The structure of the previous ECM may not be compatible with the destination ECM. Alternatively, a new folder structure is used to increase productivity.

- Changing metadata value formats: A date value in the source ECM may have a different format than the date values in the destination ECM. They must be converted to match.

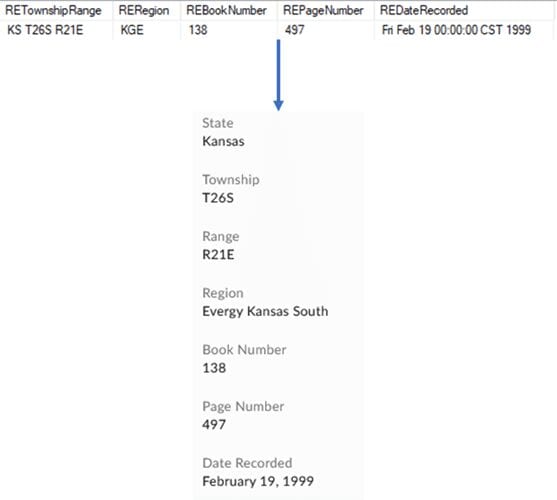

- Mapping metadata values: Metadata values in the source ECM may be represented differently in the destination ECM but have the same meaning. For example, the value TX is mapped to Texas.

- Splitting a value into multiple columns: A single metadata value may be split into different values in the source ECM. For example, an address value can be split into street, city, and zip code values.

Load

The last stage is loading all the extracted data from the staging area into the destination ECM. There are two methods of loading data:

- Full load: All the data is loaded in a single run. The recently migrated data will overwrite any existing data.

- Incremental load: Data is loaded incrementally in multiple runs. Each incremental update leaves the previously loaded data untouched.

Which method is the best one for migrating content? It depends. For full loads, it’s best to do it when only a small amount of content needs to be migrated. The risk of failure increases for larger sets of content to migrate which means the whole operation will have to be restarted, overwriting previously migrated data. It’s better to do an incremental load for larger sets of content. For example, a large set of content is split into multiple batches. Afterwards, we can run each batch separately. That way, if a failure occurs, it will only affect a small set that will have to be re-migrated.

To load the extracted content into the destination ECM, the ECM’s API will be used to upload the files. Afterwards, the metadata values of the associated file will be read and then applied to the uploaded file with a separate API call.

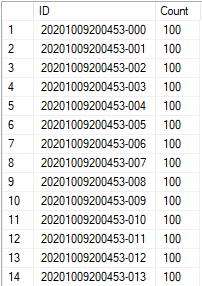

In a recently wrapped up migration project, we executed several incremental loads across multiple lines of businesses. We split the extracted content into hundreds of batches and assigned unique IDs to each batch. Unique IDs help us keep track of the migration progress and with identifying any batches with failed uploads.

Reconciliation

After loading everything into the destination ECM, the migrated and source content are reconciled to ensure the content was migrated correctly. One way of reconciling is verifying the file integrity between the migrated and source content by generating hashes and comparing them.

Conclusion

With the rising popularity of cloud-based ECM solutions, there has never been a better time to make your move to the cloud and reap the benefits. While it may seem intimidating to migrate all your content, by following the series of steps from ETL, it won’t have to be.

Stay tuned for more blogs where we will discuss previous migration projects we did with our clients and the details of ETL between specific ECMs.

Interested in moving your content to the cloud? Contact us here!